1. Introduction

Rapid progress in AI technologies has generated considerable interest in their potential to address challenges in every field, and education is no exception. Improving learning outcomes and providing relevant education to all have been dominant themes worldwide, in both the developed and the developing world, and they have taken on greater significance in the current era of technology-driven personalization.

Learning outcomes, however, have stagnated in the U.S. and have remained relatively low in the developing world. In their 2020 annual letter, Bill and Melinda Gates point out that despite spending billions, they have not seen the kind of progress they expected in learning outcomes in K-12 public schools (CNBC, 2020). In India, the Annual Status of Education Report (ASER) finds year after year that many 5th graders in public schools are unable to read 2nd grade text (ASER, 2019). Recently, the Central Square Foundation (2020) found that learning outcomes in India’s private schools, while better than in public schools, are still poor: 35% of rural private school students in Grade 5 cannot read a Grade 2 level paragraph.

Lack of equity in education has been a contributing factor to poor learning outcomes. In the U.S., a 2013 report by the Equity and Excellence Commission of the U.S. Department of Education stated that “some young Americans – most of them white and affluent – are getting a world-class education” while those who “attend schools in high poverty neighborhoods are getting an education that more closely approximates schools in developing nations.” Compounding the challenge of providing equitable access to educational opportunities is a new generation of learners with highly differentiated educational needs. This generation includes displaced and differently abled learners, immigrants, veterans, gig workers, and those who are underemployed or seeking career changes.

Over the last couple of decades, there has also been significant advancement in our knowledge of how we learn. Studies have identified several practices, loosely referred to as learning science, that, if systematically implemented, can help learners realize their full learning potential. However, these practices have not yet found their way into educational curricula in a systematic fashion. It has been largely up to individual teachers to adopt them as they saw fit, based on their experience.

Another macro trend affecting learning in general has been the evolution of the teacher’s role. Digital technologies and AI are moving the teacher from being the sole influence on learning to being more of a facilitator and coach. In his far-sighted foreword, John Seely Brown (Iiyoshi & Vijay Kumar, 2010) recognizes that not all learning happens inside the classroom, arguing forcefully for blurring the distinction between formal learning [in classrooms] and informal learning [outside them]. He identified three influences as shaping the future of education: the digital age, social learning and technology. The impact of these influences is very relevant today.

The coronavirus pandemic we are living through will likely alter permanently how the various stakeholders in education interact. Approximately 1.5 billion learners and 90 million teachers were forced out of the physical classroom (UNESCO, 2020) for a protracted period. Almost overnight, most educational institutions had to go online, and they have remained in either fully remote or hybrid learning modes for 18 months or so. The forced transition to remote learning has revealed certain benefits, created interesting challenges and caused changes in behavior, such as a greater willingness to adopt AI technologies. The challenges related to equity in education have only been exacerbated and have come into sharper focus. All of a sudden, parents have had to become para educators. The need for innovative solutions for delivering high-quality education remotely, at speed and at scale, has never been greater.

We believe AI technology has the potential to help the world address these chronic challenges in education relating to equity, learning outcomes and real-world relevance. In this paper, we describe a preferred future for the role of AI in learning. Our vision of a preferred future is embodied in a comprehensive AI-first solution framework for learning, with the objectives of scaling quality education affordably, optimizing the learning potential of individual learners, enabling teachers to be more effective, and enabling parental involvement to have a positive impact.

2. Literature review

In this section, we review prior research in a number of relevant areas: AI in Education (AIED), the changing role of the teacher, parental involvement, the neurobiology of learning and the constructivist theory of education. In arriving at a preferred future, we believe a holistic view encompassing all these dimensions is critically important.

2.1. AI in learning

Baker and Smith (2019) group AIED applications into three categories: (i) learner-oriented AIED; (ii) instructor-oriented AIED; and (iii) institutional system-oriented AIED. However, applications that focus on learning often cut across several stakeholders, especially in the current context, and serve a common objective: learning. They are likely to reflect a collaboration among teachers, learners, parents, tutors and peers. We prefer the following modified categorization:

- learning-oriented, including learners, teachers, educational institutions, tutors, peers, parents and para educators;

- institutional operations-oriented, including financial aid, retention and learner experience;

- policy-oriented, including governmental interventions, subsidies and standardized assessments like MCAS in the U.S.

Our focus in this paper is on learning-oriented applications in AIED. We use AIED and ‘AI in Learning’ synonymously in the remainder of the paper.

Guan et al. (2020) summarize the various definitions of AIED in prior research:

| Authors | Definition |
|---|---|
| Hwang (2003) | Intelligent tutoring system that helps to organize system knowledge and operational information to enhance operator performance, and automatically determines exercise progression and remediation during a training session according to past student performance. |
| Johnson et al. (2009) | Artificially intelligent tutors that construct responses in real time using their own ability to understand the problem and assess student analyses. |
| Popenici and Kerr (2017) | Computing systems that are able to engage in human-like processes such as learning, adapting, synthesizing, self-correction and use of data for complex processing tasks. |
| Chatterjee and Bhattacharjee (2020) | Computing systems capable of engaging in human-like processes such as adapting, learning, synthesizing, correcting and using various data required for processing complex tasks. |

The first two definitions of AIED above are focused on intelligent tutoring and the last two are slightly broader but still focused on the learner.

Based on an analysis of over 400 research articles on the application of AI to teaching and learning published between 2000 and 2019, Guan et al. (2020) find shifts in research emphasis between the first and second decades. During 2000–2009, the emphasis was on learner-oriented approaches, trending away from instructor-oriented approaches. In the second decade, 2010–2019, there was a noticeable shift towards modeling learning outcomes/analytics and student profiling. These findings reflect the growing interest in explicit measurement of learning outcomes and in predictive AI models, including the use of deep statistical learning.

Xie et al. (2019) review trends and developments in technology-enhanced adaptive/personalized learning (PLL) through related journal articles published in the decade 2007–2017. Of particular note is Chen et al. (2021), who found that learners’ gender, cognitive styles and prior knowledge can determine whether personalized learning systems will be effective. Another notable finding is by Tseng et al. (2008), who found that integrating learning styles and learning behaviors can help determine the appropriate learning materials for a learner. Xie et al. (2019) identify wearable personal learning technologies, and collaborative and immersive personalized learning using virtual reality, as areas for future applications of adaptive/personalized learning.

Based on a review of 220 research articles on personalized language learning, Chen et al. (2021) find that (1) multimodal videos promote effective personalized feedback; (2) personalized context-aware ubiquitous language learning enables active interaction with the real world by letting learners apply authentic and social knowledge to their surroundings; (3) mobile chatbots with Automated Speech Recognition (ASR) provide human-like interactive learning experiences to practice speaking and pronunciation; (4) collaborative game-based learning with customized gameplay paths and interaction and communication features supports social interactivity and the learning of various language skills; (5) AI promotes effective outcome prediction and instruction adaptation for individuals based on massive learner data; (6) Learning Analytics (LA) dashboards facilitate personalized recommendations through learning data visualization; and (7) data-driven learning allows personalized and immediate feedback in real-time practice. Overall, these findings support the view that AI technology can be beneficial in personalized language learning.

In a review of AI-supported e-learning (AIeL) research, Tang et al. (2021) found that several of the studies analyzed reported a positive impact of AI on e-learning participants’ learning performance or perceptions. They found that a large portion of AIeL applications take the form of intelligent learning systems, which provide personal guidance or feedback based on individual students’ learning status. They suggest that predicting students’ learning characteristics by collecting and analyzing their online learning behavior and performance is a ripe area for research. From the follow-up studies, they also conclude that developing adaptive learning environments and providing personalized learning support based on students’ learning characteristics are important trends in AIeL research.

Hwang (2014) defines the criteria for ‘smart learning’ environments. Such environments enable learners to access digital resources and interact with learning systems at any place and any time, and actively provide the necessary learning guidance, hints, supportive tools or learning suggestions in the right place, at the right time and in the right form. The study presents a framework addressing the design and development considerations of such smart learning environments to support both online and real-world learning activities. While the framework has several valuable elements, it focuses on creating an appropriate context-aware learning environment that goes beyond a purely adaptive one. It does not provide an operational technology blueprint for AIED.

In an important contribution, Chen et al. (2020) reviewed 45 influential articles, evaluated definitions of AIED from broad and narrow perspectives, and clarified the relationships among AIED, Educational Data Mining, Computer-Based Education and Learning Analytics. The findings relevant to this paper are that little work had been done to bring deep learning technologies into educational contexts; that natural language processing (NLP) technologies were commonly adopted in educational contexts, while more advanced techniques were rarely adopted; and that there was a lack of studies that both employ AI technologies and engage deeply with educational theories. Their recommendations are to explore the potential of applying AI in physical classroom settings; devote effort to recognizing detailed entailment relationships between learners’ answers and the desired conceptual understanding within intelligent tutoring systems; pay more attention to the adoption of advanced deep learning algorithms such as generative adversarial networks and deep neural networks; explore the potential of NLP in promoting precision or personalized education; combine biomedical detection and imaging technologies; and closely integrate the application of AI technologies with educational theories. We agree with many of their recommendations but must sound a note of caution about the one suggesting greater adoption of deep learning technologies.

The above reviews provide valuable summaries of the progress AIED has made, especially in personalized and adaptive learning. Broadly, the findings support the hypothesis that personalization makes a difference when learner characteristics and differences are carefully considered. The trend towards more explicit measurement of learning outcomes is healthy and much needed. In incorporating learning styles, extant research, with the exception of Srinivasan and Murthy (2021), does not consider the beneficial impact of engaging multiple sensory channels. Nor does it explicitly propose a generalized framework for integrating findings from learning science. Granular personalization governed by learning styles, including the influence of different sensory modes, is integral to a more holistic AI-led learning framework.

Another area that has attracted significant attention is essay grading, i.e., the grading of open text responses (Janda et al., 2019). However, most, if not all, of the solutions are black boxes and are not considered viable or effective. Because they rely on word-based statistical models, none of the ‘Automated Essay Evaluation’ systems capture the real meaning of an essay or its relevance to the related learning content. They suffer from other serious limitations: the need for large amounts of training data, the resulting bias, the lack of traceability and the lack of tractability. Since model training depends entirely on the data set, they have been criticized for failing to recognize gibberish essays [Kolowich, 2014] and essays that reflect divergent thinking. They are also not configurable for course-specific essay assignments, since the rubric may vary from course to course and there will not be enough data to train a statistical model in such cases. Unfortunately, this has led online learning to rely on multiple choice assessments and on ‘peer grading’ of open text responses. Given the importance of writing in reflecting complete understanding, AIED needs to focus on automated evaluation of text responses with full understanding of the learning objectives/rubric and the learning material.
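To make the concern about word-based statistical scoring concrete, the following is a minimal sketch of a hypothetical scorer, not the method of any particular Automated Essay Evaluation product. It assumes an essay is scored by the bag-of-words cosine similarity between the essay and a reference answer; the reference text and essay are invented for illustration. Because a bag of words discards word order, a randomly shuffled and meaningless version of the essay receives exactly the same score as the original.

```python
import math
import random
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Lower-case, split on whitespace, strip simple punctuation and count tokens."""
    tokens = (t.strip(".,;:!?").lower() for t in text.split())
    return Counter(t for t in tokens if t)


def cosine_score(essay: str, reference: str) -> float:
    """Hypothetical scorer: cosine similarity of word-count vectors (0.0 to 1.0)."""
    a, b = bag_of_words(essay), bag_of_words(reference)
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Invented example texts, for illustration only.
reference = "Photosynthesis converts light energy into chemical energy stored in glucose."
essay = "Plants use photosynthesis to convert light energy into chemical energy stored as glucose."

# Shuffle the essay's words: the result reads as gibberish to a human grader.
words = essay.split()
random.Random(0).shuffle(words)
gibberish = " ".join(words)

print(round(cosine_score(essay, reference), 3))
print(round(cosine_score(gibberish, reference), 3))  # same score as the line above
```

Real systems use richer features than raw word counts, but any scorer whose features ignore word order and meaning can be exposed in the same way.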

An area of significant concern in developing and implementing AI applications is the potential misuse of AI, specifically the issue of bias arising from reliance on data, and the need for governance. Bias from data is a significant issue which inherently makes all statistical deep learning models unusable. Digital Promise [www.digitalpromise.org] has recently introduced a certification product that prioritizes racial equity by proactively identifying and minimizing racial bias in a product’s algorithms and design. Yang et al. (2019) propose that AI can evolve into human-centered AI (HAI). In particular, they draw attention to how AI can inhibit the human condition and advocate an in-depth dialog between technology-based and human-based researchers to improve understanding of HAI from various perspectives. However, Yang et al. (2019) do not recognize or provide any solutions to address the root causes of data-driven bias. In our opinion, AIED needs to adopt explainable and tractable models of intelligence acquisition.

Some research studies (Pinkwart, 2016; Rummel et al., 2016; Schiff, 2020) have specifically focused on predicting a future for AIED. Pinkwart (2016) presents two possible futures: a utopian vision and a dystopian vision. Comparing the two extreme scenarios, the author summarizes seven challenges AIED might face in the future: cultural context, practical impact, privacy, interaction methods, collaboration at scale, effectiveness in multiple domains, and the need for AIED to play a more important role in educational technology. In our opinion, all seven are valid issues that must be addressed if AIED is to succeed at scale. The author does not, however, present any systemic solution to address these challenges.

Rummel et al. (2016) similarly present areas of research they consider important to avoid the pitfalls of a dystopian view of the future of adaptive collaborative learning support (ACLS). Specifically, they advocate working towards a comprehensive instructional framework, building on educational theory, in order to provide nuanced and flexible (i.e., intelligent) ACLS to collaborative learners. We agree with the basic premise of their recommendation: the need for nuanced and flexible personalized learning support based on educational theory and findings. In fact, the framework proposed in this paper reflects a similar underlying rationale.

Schiff (2020) reviews the status of AIED, specifically intelligent tutoring systems and educational agents, and attempts to chart development pathways for AIED. The study notes in particular that MOOCs did not succeed widely because of a mistaken assumption of technological linearity and their inability to reproduce in-person teacher-student interaction. Overall, he points out that the previous round of educational technologies was not as successful as hoped because of quality gaps in implementation. Schiff (2020) presents two competing futures for AIED: one rooted in efficiency through automation and the other rooted in robust differentiation at the student level. We do not see them as competing; AI technologies can enable us to achieve both efficiency and individualized learning.

In addition to the challenges identified by Pinkwart (2016), AIED also has to address broader challenges that arise with AI when adopting a purely data-driven approach to intelligence acquisition: explainability, tractability, reliability of data, and full context awareness (Marcus, 2020; D’Amour et al., 2020; Srinivasan, 2017). No amount of data will ever be complete (Srinivasan, 2017), and the resulting models will contain whatever biases the data has. Explainability will be critical for many AIED applications. Tractability is a further challenge: the only way to improve models that depend purely on data is to add more data and hope it improves the model. Revised models trained on additional data may change previous results, which in some cases creates issues of reproducibility. Finally, in some areas like essay grading and natural language processing, current technologies do not have the ability to retain full context. Neural language models like BERT (Bidirectional Encoder Representations from Transformers), for example, are based on word patterns and do not understand the text despite incorporating word-level distributional semantics through embeddings; see, e.g., Bender and Koller (2020).
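The reproducibility concern can be illustrated with a deliberately tiny, hypothetical example: a nearest-centroid classifier that labels an essay ‘pass’ or ‘fail’ from a single feature, essay length in words. The feature, the labels and all of the data points are invented for illustration and do not come from any study. The sketch shows how revising a purely data-driven model by adding more data can silently reverse a decision it previously made for the same student.

```python
from statistics import mean


def train_centroids(examples):
    """Nearest-centroid 'model': the mean feature value for each label."""
    labels = {label for _, label in examples}
    return {label: mean(x for x, lab in examples if lab == label) for label in labels}


def predict(centroids, x):
    """Assign x to the label whose centroid is closest."""
    return min(centroids, key=lambda label: abs(centroids[label] - x))


# Hypothetical training data: (essay length in words, grade label).
data_v1 = [(300, "pass"), (320, "pass"), (340, "pass"),
           (100, "fail"), (120, "fail"), (140, "fail")]
student_essay_length = 230

model_v1 = train_centroids(data_v1)             # centroids: pass=320, fail=120
print(predict(model_v1, student_essay_length))  # pass

# "Improve" the model the only way a purely data-driven model allows: add data.
data_v2 = data_v1 + [(250, "fail"), (260, "fail"), (270, "fail")]
model_v2 = train_centroids(data_v2)             # centroids: pass=320, fail=190
print(predict(model_v2, student_essay_length))  # fail: the earlier result is not reproduced
```

Nothing about the student’s essay changed between the two runs; only the training set did. Larger statistical models are harder to inspect, but the same mechanism can apply to them.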

Apart from the issue of bias (Yang et al., 2019), much of the AIED literature, while extensive, has not yet recognized the implications of these fundamental challenges for the use and adoption of AI in learning. Some authors even suggest that AIED research should adopt more sophisticated methods like deep neural network models. In our view, a blind focus on methods, without a rationale for why and how they would benefit learning, will not advance the use of AI for learning.

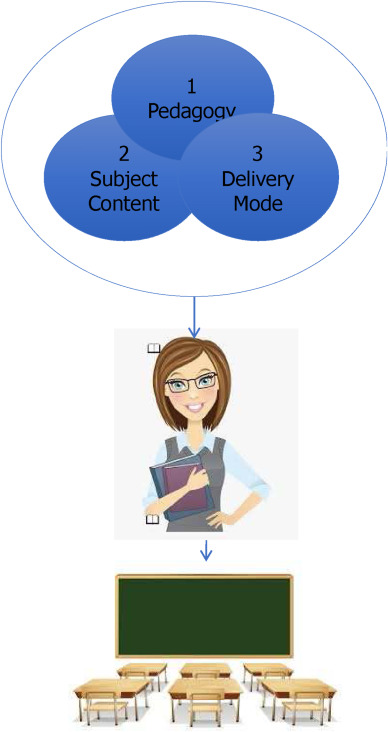

2.2. The changing role of the teacher

Since the industrial revolution, the prevalent teaching/learning model has centered on a human teacher. As shown in Fig. 1, a good teacher had to be a subject matter expert who stayed current at all times, an expert in the pedagogy relevant to that subject, and an expert at effectively imparting the subject using the desired pedagogy. In fact, many studies (e.g., Beteille & Evans, 2019) have found that high-quality teaching can have an outsized impact on learning. In the United States, Popova et al. (2016) found that the difference between a good and a bad teacher can equate to a full year of learning for a student.

Fig. 1. The conventional role of a teacher.

There are substantial challenges in scaling good teachers if they are defined as shown in Fig. 1. Many elements of quality professional development for teachers that lead to impact at a small scale can be very hard to preserve when implemented at a large scale. Among the most commonly reported issues in scaling quality professional development for teachers have been cost, maintenance of quality, identification of high-quality trainers, and adapting the training programs to the local context.

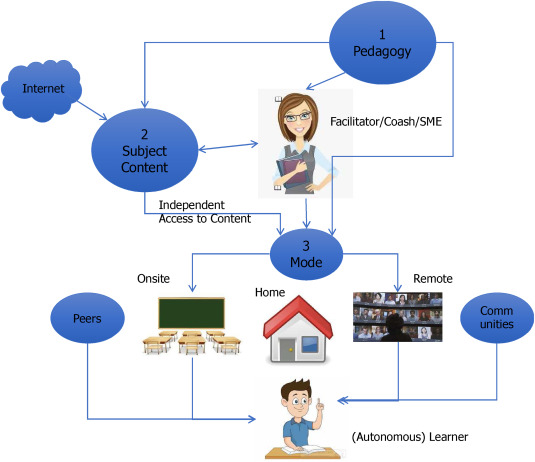

With the advent of the internet and other technological advances, learners have started to become more autonomous and the role of the teacher has been changing, especially in the online context. Fig. 2 shows the disaggregation of the three dimensions we defined in Fig. 1 as characteristic of a good teacher, reflecting the impact of the new digital environment, including the internet and digital technology. Content on every conceivable subject is growing exponentially on the internet. The teacher and the textbook are no longer the only sources of content, and teachers cannot possibly keep up with all the new content and knowledge by themselves. Further exacerbating the issue, today’s autonomous learners are likely to be aware of new content before their teachers. AI can play a significant role in helping teachers stay abreast of new content and knowledge.

Fig. 2. The disaggregation of the Teacher’s role.

In terms of pedagogy, and partly reflecting the digitally connected environment we are in today, the flipped classroom model has learners complete assignments in the classroom and study the learning content at home through video lectures and on-screen tutorials. In the digitally connected world, there are multiple modes of delivery, all of which intersect and interact with one another. Learners can learn autonomously anywhere, including at home and in the classroom, can interact with peers, mentors and parents, and can belong to communities.

Today’s teacher is therefore much more of a coach and facilitator. Of course, the teacher is also expected to be an expert in the subject matter, but in a more foundational sense: well versed in the core aspects of the subject.

Further, COVID-19 forced all institutions to move online almost overnight, irrespective of their prior beliefs about online education. Even though the immediate shift has largely been a ‘lift and shift’ of materials and instruction, many teachers have been forced to use a variety of digital tools they were not familiar with before. As they gain experience with these tools, we expect they will want to modify pedagogy and materials to leverage the tools’ capabilities. Moreover, the emerging consensus seems to be that the future is likely to be a blended model in most institutions. As a result, we can expect further changes in the role of the teacher.

The AIED literature does not explicitly recognize the disaggregation of the teacher’s role, the emergence of the autonomous learner, or the resulting implications for learning. The real opportunity before us is to use AI to scale this disaggregated model more effectively, to improve learning and teaching, and to do so in an equitable manner.

2.3. The role of parents as educators

The pandemic we are living through has forced parents to play a greater role in the education of their children, at least in K-12 settings. With schools going remote at least partially, parents have had to teach and/or monitor their children’s learning at home. This has created a huge need to support parents in these roles. In many cases, parents may not have had the necessary education themselves, and few are trained in pedagogy. We believe this will lead to an increased need for tutoring.

Is the greater role for parents temporary? Even as schools return to normal functioning, we speculate that parental involvement may continue at an elevated level.

The research on the relationship between parent involvement and academic achievement is mixed. McNeal Jr. (2014) finds that while much research supports the claim that parent involvement leads to improved academic achievement (e.g. Burcu & Sungur, 2009; Coleman, 1991; Epstein, 1991; Henderson, 1991; Lee & Bowen, 2006; Patel, 2006), other research indicates that parent involvement is associated with lower levels of achievement (e.g. Brookover et al., 1979; Domina, 2005) or has no effect on achievement (e.g. Brookover et al., 1979; Domina, 2005; El Nokali et al., 2010). Additionally, parent involvement’s effect on academic achievement has been found to vary by the minority and/or social status of the student (e.g. Hill et al., 2004; Lee & Bowen, 2006), by gender (Muller, 1998), and by immigrant status (Kao, 2004). Finally, many studies find positive, negative, and/or no associations between parent involvement and academic achievement within the same study (e.g. Domina, 2005). Surprisingly, the contradictory findings are remarkably consistent and cut across grade level, measure of academic achievement, and time (spanning the middle 1970s to the late 2000s).

To our knowledge, there have been no studies on how AIED can play a role in enabling parents to be para educators. Intelligent learning assistants appropriately personalized and integrated with learning science at a granular level can make parents and teachers much more effective.

2.4. The neurobiology of learning

Over the last few decades, there has been considerable advancement in our understanding of how our brains construct and retain knowledge, i.e., how we learn. A systematic integration of these findings offers a tremendous opportunity to maximize the learning potential of every learner.

Our brains are believed to store knowledge in structures commonly referred to as schemas. It has also been found that our brains encode new information that fits prior knowledge more efficiently than novel information [van Kesteren et al., 2012]. Recent findings across neuroscience [St Jacques et al., 2013], psychology [Roediger & McDermott, 1995] and educational science [Castro Sotos et al., 2007] suggest that when a schema becomes too strong, it can end up facilitating the formation of false memories and misconceptions. False memories and misconceptions are clearly a major obstacle to learning.

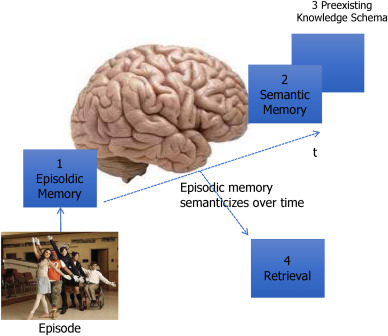

Regarding the process of knowledge construction, our brains have been found to follow a sequence of processes to construct knowledge [Paller & Wagner, 2002]. First, new information is encoded into a memory. Right after encoding, a memory is usually episodically detailed, containing details about time, place and context. Over time, encoded memories are consolidated during sleep (and presumably also during rest). Consolidation is generally believed to semanticize memories, such that episodic details fade away and what remains is a more abstract version of the encoded memory. This is illustrated in Fig. 3.

Fig. 3. Knowledge construction in the brain.

Fig. 3 shows an episode being captured, at the time we witness it, as an episodic memory in rich detail. Over time, illustrated by the timeline t, this episodic memory is semanticized and we start losing the rich detail. At some point, we will barely remember the specifics, except for some aspect of the event that made a very deep impression on us. How the episodic memory is gradually absorbed into long-term memory depends a great deal on the pre-existing knowledge schema in our brain. As we try to recall the episodic event over time, the specificity of our recall will vary depending on when along the timeline we attempt it. This is illustrated by the arrow from the timeline t to ‘3 Recall’.

Generally, when memories are retrieved after consolidation, they have a different neural architecture than when they were first encoded [Dudai et al., 2015]. Moreover, they do not always contain the same details, showing that memories are altered over time beyond our conscious awareness and are not retrieved as a solid entity, but rather reconstructed depending on present cues. The act of retrieval is generally thought to alter a memory again, updating it with previously and currently learned or retrieved information. Memories are then reconsolidated into existing schemas, and in this way, schemas are thought to be continuously adjusted to optimize our understanding of the world around us and to allow prediction of future occurrences [Benoit et al., 2014].

Also, it is generally accepted that our brains have evolved to predict what will happen next. Our brain is continuously absorbing information from the environment to expand its world model and optimize its internal predictive model. Conversely, detailed episodic memories are also valuable as they can help to update the model when the world happens to change. In terms of learning, the learner’s pre-existing world model as it relates to the material being learned will have a huge influence on how effectively they learn. Active learning [Bonwell & Eison, 1991] is widely regarded as a seminal attempt to reflect this process in learning.

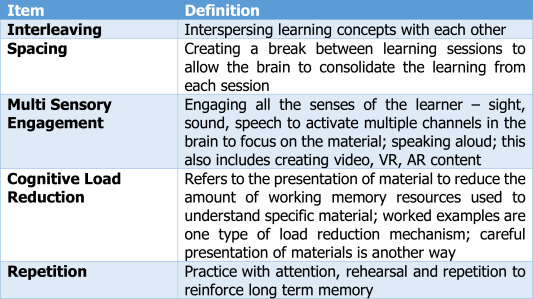

Based on the above review of how our brains structure knowledge and incorporate new information, it would appear that the way to optimize a learner’s learning potential is to create the best possible memory, with both semantic and episodic details. van Kesteren and Meeter [2020] summarize specific actions during encoding, consolidation and retrieval that can contribute to the best possible memory for a learner. Many of these are summarized in Fig. 4.

Fig. 4. Methods for best possible memory creation.

In a related vein, Ghazanfar and Schroeder (2006) also find that the brain has evolved to operate optimally in multisensory environments. If a classroom exercise stimulates more than one of the learner’s senses at any given time, multiple channels of the brain work to process the same information, and the brain devotes more cognitive resources to that information. In addition, the brain creates some interactivity between the two modes, essentially storing the information cross-referenced between the channels [Clark et al., 1991]. Thus, multisensory engagement may accelerate efficient knowledge construction in the brain.

Researchers at the University of Waterloo [Forrin & MacLeod, 2017] found that speaking text aloud helps to get words into long-term memory. The study determined that it is the dual action of speaking and hearing oneself that has the most beneficial impact on memory. Closely related is the practice of rote memorization, where a learner recites long passages of text from memory. While its standalone impact on learning is not proven, its general beneficial impact on grey matter is well established. In a study of India’s Vedic Sanskrit pandits, who train for years to orally memorize and exactly recite 3,000-year-old oral texts ranging from 40,000 to over 100,000 words, numerous regions in the pandits’ brains were dramatically larger than those of controls, with over 10 percent more grey matter across both cerebral hemispheres and substantial increases in cortical thickness (“the famous Sanskrit Effect”) [Hartzwell, 2018]. Textual memorization is standard in India’s ancient learning methods, and it is widely believed that exactly memorizing and reciting the ancient scripts enhances both memory and thinking. Other studies [Gao et al., 2019; Kamal et al., 2013] report a significant deactivation of certain areas of the brain as a result of religious chanting, indicating relaxation and a meditative effect.

2.5. The constructivist theory of education

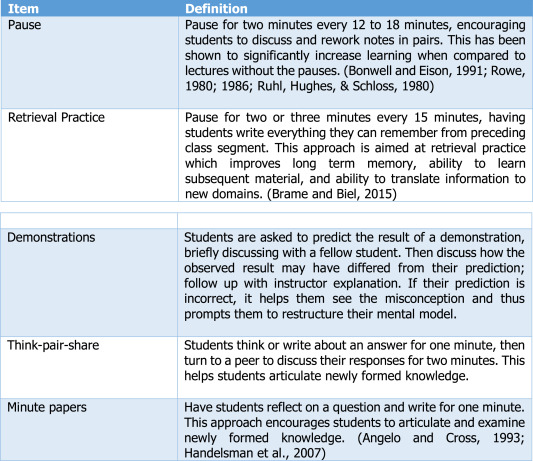

Educational psychologists have also attempted to understand how cognitive processes work and have advocated a theory of learning called the Constructivist Theory of Education [Pass, 2004, p. 74]. The theory holds that learners construct knowledge for themselves and that such knowledge construction depends on their existing knowledge schema. This thinking has led to the idea of ‘Active Learning’. Bonwell and Eison [1991] defined active learning as “instructional activities involving students in doing things and thinking about what they are doing”. Approaches that promote active learning require students to do something (read, discuss, write) that requires higher-order thinking. They also tend to place some emphasis on students’ exploration of their own attitudes and values, and they often explicitly ask students to make connections between new information and their current mental models, extending their understanding. Fig. 5 lists various techniques for achieving ‘Active Learning’ in the physical classroom.

Techniques that replace some lecturing as part of active learning have also been suggested, including case-based learning, concept maps [Novak & Canas, 2008] and student-generated test questions [Angelo & Cross, 1993]. Other active learning pedagogies, many of which have a strong following, include team-based learning, process-oriented guided inquiry learning, peer-led team learning, the flipped classroom model and problem-based learning.

Several of the techniques listed in Fig. 5 indicate that writing is the most effective way to reflect understanding. Writing is a critical part of all three phases of knowledge construction: encoding, consolidation and retrieval. In fact, complete understanding can only be assessed through essays and open text responses. Yet few courses today incorporate open text assessments extensively; open text questions have largely been replaced by multiple choice questions because teachers and institutions do not have the time to assess open text responses. AI can play a significant role here by automating the assessment of open text responses.

Fig. 5. Some active learning techniques.

Many of the Active Learning techniques build on findings by neuroscience researchers.

2.6. Literature review summary

We have reviewed the literature in four areas that we believe are highly relevant to a holistic, integrated framework for a preferred AI-led future for learning. We summarize our overall findings here.

The AIED research reviewed in section 2.1 has focused on applications of AI to specific issues in learning and education. We have presented the overall findings of many of these studies as reported by several meta-studies. More importantly, we have highlighted throughout the review the limitations and challenges of AI applications in education. Some authors have proposed taxonomies for organizing AIED research meaningfully, and a few have proposed their vision of a future for educational technology. However, research on future pathways for AIED remains partial or high level, without any specific, actionable blueprint for a technology solution.

In section 2.2, research related to the changing role of teachers validates their evolution into coaches and facilitators, but prior work in that field has not integrated how AI technology can support this evolving role and deliver quality education at scale. Further, there does not appear to be any specific research on how AI technology can address the knowledge gap created by the continuous flow of new information.

In section 2.3, we summarized the literature related to parental involvement in student learning. Contrary to popular belief, the literature is not uniformly conclusive about the beneficial impact of parental involvement. We did not find any direct study of how AI technology can help parents be effective, or even obviate the need for parents to play the role of teachers, especially if they are not proficient in the subject.

Finally, section 2.4 reviews the literature on knowledge construction and learning science. These findings have not been explicitly reflected in the studies envisioning a future evolution of AIED or in the AIED taxonomies proposed in the literature. While there are many successful real-world instances where these findings have been applied, it is largely left to teachers to incorporate them as they see fit. There has been no systematic effort at the institutional level to integrate learning science techniques into curricula, possibly because these techniques are difficult and cumbersome to implement manually. AI offers a significant opportunity to address this challenge.

In summary, while prior AIED research has made many valuable contributions, we believe there is a need for conceptualizing a holistic actionable framework reflecting the role of AI in learning.

3. A preferred future for AI-Enabled learning

In this section, we present a preferred future for the role AI can play in achieving effective, equitable learning at scale. We present this preferred future in the form of a comprehensive solution framework.

The framework approaches learning holistically and attempts to integrate foundational learning, learning science and personalization. In the process, it also addresses the gaps in the AIED literature identified in the previous section. The framework comprises several components, each of which can scale specific aspects of learning irrespective of the subject. These include foundational components for learning combined with learning-science-optimized functional content for any topic, subject or field. The framework brings all the individual components together through a Learning Orchestrator personalized to the individual learner. Together, they provide a robust foundation for any AIED application. The framework is equally relevant to the other stakeholders in the learning process: teachers, parents, peers, mentors, educational institutions and other learning environments, such as corporations interested in re-skilling or up-skilling employees.

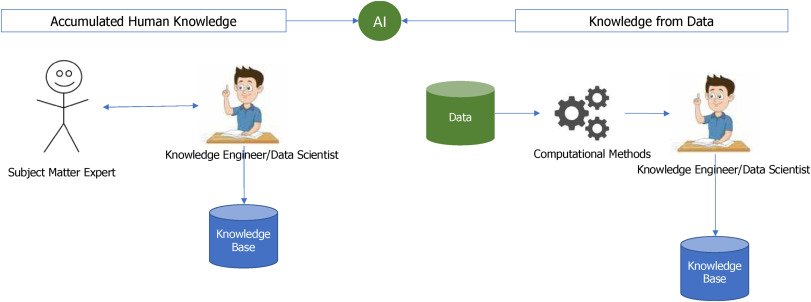

For the purposes of the framework, we define AI as synonymous with intelligent machines that can perform tasks that humans do. These machines can acquire intelligence from data, from experts or from both. For most real-world usage, intelligence acquisition will likely combine expert intelligence with intelligence acquired from data, as shown in Fig. 6. We refer to this combination as ‘computational intelligence’ and define AIED applications as those that incorporate computational intelligence. Further, we propose that all AI models in the framework be explainable, except in cases where explainability is not needed.

Fig. 6. Machine intelligence acquisition.

An AI model is explainable when its specifics, such as its features, are clearly visible to and understandable by subject matter experts. Deep learning using neural networks produces black boxes that are not currently explainable. Methods such as random forests and CART, and natural language processing technologies such as Gyan [www.gyanAI.com] that do not rely on statistical language models (e.g., BERT and GPT3, Generative Pre-trained Transformer 3), are explainable. Deep neural networks may be acceptable in areas where it is not essential for the user or modeler to understand model specifics or validate causality.
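To make the notion of explainability concrete, the sketch below fits a depth-one CART-style decision tree (a ‘stump’) using Gini impurity in plain Python and prints the learned rule, which a subject matter expert can read and check directly. The feature names and data are hypothetical; a full CART tree applies the same split search recursively to each child node.

```python
from collections import Counter

def gini(labels):
    """Gini impurity of a list of class labels (0.0 means the node is pure)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def fit_stump(rows, labels, feature_names):
    """Search every (feature, threshold) split of the form feature <= threshold
    and keep the one with the lowest weighted Gini impurity, as CART does at a node."""
    n = len(rows)
    best = None
    for j, name in enumerate(feature_names):
        for threshold in sorted({r[j] for r in rows}):
            left = [y for r, y in zip(rows, labels) if r[j] <= threshold]
            right = [y for r, y in zip(rows, labels) if r[j] > threshold]
            if not left or not right:
                continue  # a split must send data to both sides
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if best is None or score < best[0]:
                best = (score, name, threshold,
                        Counter(left).most_common(1)[0][0],
                        Counter(right).most_common(1)[0][0])
    return best

def explain(stump):
    """Render the learned split as a human-readable rule."""
    _, name, threshold, left_label, right_label = stump
    return f"IF {name} <= {threshold} THEN {left_label} ELSE {right_label}"

# Hypothetical learners: (practice_sessions, quiz_avg) and an outcome label.
rows = [(1, 45), (2, 50), (3, 55), (6, 70), (7, 80), (8, 85)]
labels = ["needs_support"] * 3 + ["on_track"] * 3
stump = fit_stump(rows, labels, ["practice_sessions", "quiz_avg"])
print(explain(stump))
```

The entire model is the printed rule, so an expert can validate it against their own understanding, which is the property the framework asks for.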

We also believe the framework must reduce the need for large amounts of data by relying more on expert knowledge where it is available. A hybrid approach to knowledge acquisition can reduce dependence on large amounts of data and minimize data-specific issues such as bias. Tractability is another desirable property: if the only way to improve a model is to add more data, without understanding whether doing so will improve or worsen the outcomes, that model is less preferable. Again, if we rely on expert knowledge for a priori feature engineering and use explainable methods, the resulting models will be more tractable.
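One standard way to formalize such a hybrid of expert knowledge and data, offered here as our illustration rather than as the framework’s prescribed method, is a Bayesian prior: the expert’s belief is encoded as pseudo-observations in a Beta distribution, which is then updated with observed outcomes. A well-founded prior stabilizes the estimate when only a few observations are available, and every term remains inspectable. The quantity modeled (the probability that a learner answers a given item type correctly) and all numbers are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class BetaBelief:
    """Beta(alpha, beta) belief about a success probability."""
    alpha: float
    beta: float

    @classmethod
    def from_expert(cls, mean: float, strength: float) -> "BetaBelief":
        """Encode an expert's estimate `mean` as if it were backed by
        `strength` pseudo-observations (the strength is a modeling assumption)."""
        return cls(alpha=mean * strength, beta=(1 - mean) * strength)

    def update(self, successes: int, failures: int) -> "BetaBelief":
        """Conjugate update: add the observed counts to the pseudo-counts."""
        return BetaBelief(self.alpha + successes, self.beta + failures)

    @property
    def mean(self) -> float:
        return self.alpha / (self.alpha + self.beta)

# Hypothetical: an expert expects about 70% correctness, worth ~10 observations.
prior = BetaBelief.from_expert(mean=0.7, strength=10)
posterior = prior.update(successes=3, failures=2)
print(round(posterior.mean, 3))  # 0.667, versus 0.6 from the 5 observations alone
```

As more data arrives, the observed counts dominate the pseudo-counts, so the expert prior matters most exactly where data is scarce.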

The framework components are briefly described below:

3.1. Reading assistant

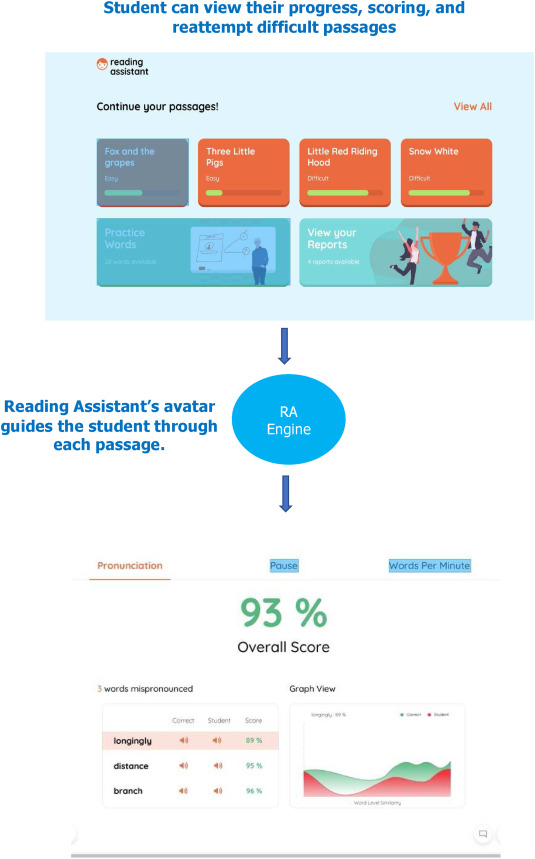

The Reading Assistant is a core component applicable to all content at every level, from elementary school through higher education to adult learners. It enables the learner to read any content aloud. The Reading Assistant supports both learning to read and reading to learn. Depending on the learner’s level, it can help improve pronunciation and reading fluency; for learners in higher grades and working learners, it can help accelerate knowledge construction as described in the previous section. The Reading Assistant can be used unassisted or in a guided mode.

Fig. 7 displays a screenshot from the Reading Assistant, which is currently being launched in K-12 schools in the U.S. The screen shows assessments of an early learner’s reading, covering pronunciation and reading fluency. Learners can practice on their own: their reading is compared algorithmically against a gold standard version, and discrepancies are analyzed and presented at the syllable and phoneme level. Learners in lower grades can use this feedback to improve their reading skills independently. An adaptive personalizer presents appropriate practice passages or words based on an analysis of the areas where the learner consistently has difficulty.

Fig. 7. Reading assistant.
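The comparison against a gold standard reading can be illustrated at the word level. The sketch below assumes that a speech recognizer has already produced a transcript of what the learner read (not shown); it aligns that transcript with the reference passage using Python’s standard `difflib.SequenceMatcher`, lists skipped, substituted and inserted words, and computes words correct per minute (WCPM), a common fluency measure. The Reading Assistant itself works at the syllable and phoneme level, so this word-level version is a simplification, and the function names are hypothetical.

```python
import difflib
import re

def words(text):
    """Lowercase word tokens with punctuation removed."""
    return re.findall(r"[a-z']+", text.lower())

def reading_feedback(reference, transcript, seconds):
    """Align a recognized reading with the reference passage.
    Returns (errors, wcpm); each error is (kind, expected, heard)."""
    ref, said = words(reference), words(transcript)
    matcher = difflib.SequenceMatcher(a=ref, b=said, autojunk=False)
    errors, correct = [], 0
    for op, i1, i2, j1, j2 in matcher.get_opcodes():
        if op == "equal":
            correct += i2 - i1
        elif op == "delete":  # words in the passage that were not read
            errors += [("skipped", w, "") for w in ref[i1:i2]]
        elif op == "insert":  # extra words the learner added
            errors += [("inserted", "", w) for w in said[j1:j2]]
        else:  # "replace": passage words read as something else
            errors.append(("substituted", " ".join(ref[i1:i2]), " ".join(said[j1:j2])))
    wcpm = correct * 60 / seconds
    return errors, wcpm

errors, wcpm = reading_feedback("The cat sat on the mat.", "the cat sit on mat", seconds=6)
print(errors)  # [('substituted', 'sat', 'sit'), ('skipped', 'the', '')]
print(wcpm)    # 40.0
```

Accumulating such errors across sessions is one plausible input to the adaptive personalizer’s choice of practice words.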

Non-native English speakers are another group of learners who will find this level of functionality valuable. For learners in higher grades, the Reading Assistant can serve as a way of accelerating long-term memory formation.

3.2. Comprehension assistant

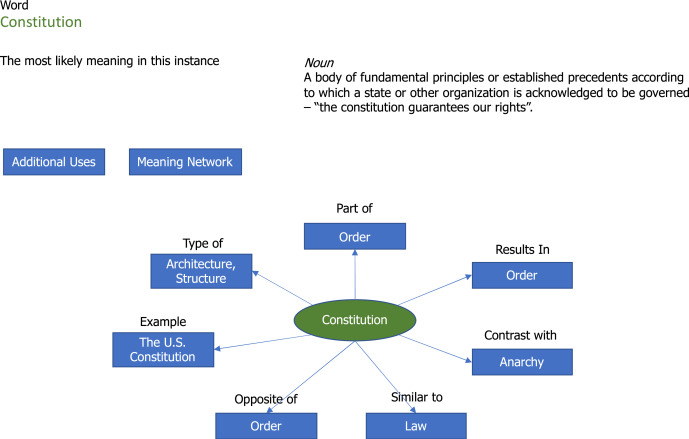

The Comprehension Assistant (Fig. 8) is another foundational component applicable to all content regardless of grade. Its goal is to accelerate understanding of a word or concept in context. The Comprehension Assistant presents a ‘Meaning Graph’, a network of concepts related to the word that also shows how they are related. We believe presenting such a network of related concepts enables stronger memory formation.

Fig. 8. Comprehension assistant – learning graph.

The Comprehension Assistant also allows the learner to become familiar with all the senses in which the word can be used and with their associated learning graphs, or semantic networks. Activity-based learning methods and assessments can draw on the full range of the word’s semantic networks.
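A meaning graph with separate word senses can be represented as a small labeled graph. The sketch below stores, for each sense of a word, edges of the form (concept, relation, related concept) and uses breadth-first search to return related concepts in layers, the same layered view used to expand learning graphs step by step. The senses, relations and concepts are hypothetical and hand-written; building such graphs automatically from text is the hard part and is not shown.

```python
from collections import defaultdict

# Hypothetical meaning graph: one edge list per sense of the word "cell".
SENSES = {
    "cell (biology)": [
        ("cell", "has part", "nucleus"),
        ("cell", "has part", "membrane"),
        ("nucleus", "contains", "DNA"),
    ],
    "cell (battery)": [
        ("cell", "produces", "electric current"),
        ("cell", "has part", "electrolyte"),
    ],
}

def layers(edges, root, depth):
    """Breadth-first expansion from `root`. Layer k holds the edges
    (source, relation, concept) for concepts first reached in k hops."""
    graph = defaultdict(list)
    for src, rel, dst in edges:
        graph[src].append((rel, dst))
    seen, frontier, result = {root}, [root], []
    for _ in range(depth):
        layer = []
        for node in frontier:
            for rel, dst in graph[node]:
                if dst not in seen:
                    seen.add(dst)
                    layer.append((node, rel, dst))
        if not layer:
            break  # nothing further to expand
        result.append(layer)
        frontier = [dst for _, _, dst in layer]
    return result

for sense, edges in SENSES.items():
    print(sense, layers(edges, "cell", depth=2))
```

Keeping one graph per sense lets an activity or assessment target a specific meaning of the word rather than all of them at once.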

3.3. Cognitive learning optimizer

We envision that the AI-enabled framework will integrate relevant learning science algorithms seamlessly into the learning process and curricula. The optimizer’s functions fall into three categories:

3.3.1. Reorganize learning content & curriculum

The framework should enable effective and systematic integration of the findings summarized in Fig. 4 with course content. Specifically, the curriculum should be designed to incorporate interleaving, spacing, repetition, multi-sensory engagement, active participation and worked examples. Without AI technology, this is extremely cumbersome and difficult to achieve. AI algorithms can assist significantly in restructuring learning content by automatically suggesting strategies for interleaving, spacing and repetition.

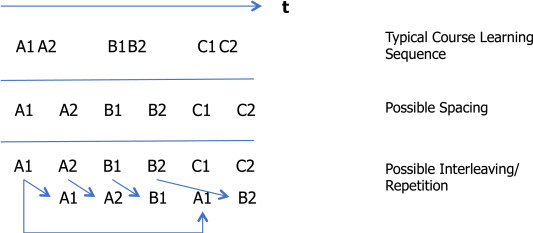

There can be many different spacing, interleaving and practice patterns for a learning pathway or course; Fig. 9 shows an illustrative example. We envision a one-time effort to create a ‘learning optimized’ version of the existing curriculum, including materials, followed by an ongoing effort to integrate new material into existing courses and to build new courses.

Fig. 9. Structure learning content to maximize memory and understanding.
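To show the kind of restructuring an algorithm could suggest, the sketch below generates a simple practice schedule that combines spacing (each topic is reviewed at expanding intervals after it is introduced) with interleaving (reviews of different topics share the same session). The doubling intervals of 1, 2, 4 and 8 sessions are an assumption for illustration, not values taken from the literature reviewed in Section 2; a real optimizer would tune them per learner.

```python
from collections import defaultdict

def spaced_interleaved_schedule(topics, intervals=(1, 2, 4, 8)):
    """Introduce one new topic per session and schedule reviews of each
    topic at expanding offsets (in sessions) after its introduction.
    Returns {session_number: [(activity, topic), ...]} in session order."""
    plan = defaultdict(list)
    for start, topic in enumerate(topics):
        plan[start].append(("learn", topic))
        for offset in intervals:
            plan[start + offset].append(("review", topic))
    return dict(sorted(plan.items()))

schedule = spaced_interleaved_schedule(["fractions", "decimals", "percentages"])
for session, items in schedule.items():
    print(session, items)
```

In session 2, for example, the learner reviews fractions and decimals and then meets percentages, so earlier topics are revisited at growing gaps while being mixed with new material.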

In a pioneering effort, Digital Promise has created Learner Models rooted in research-based factors and strategies that honor the whole child and adult learners, to help instructors and edtech product developers reach each learner in every learning environment (https://digitalpromise.org/initiative/learner-variability-project/about/). Their models can be a starting point for creating automated AI algorithms to optimize learning content. Of course, the framework needs to go beyond such learner models and adapt them to each individual learner.

Another interesting area of future development is the possible identification of an individual learner’s preferred learning mode by analyzing brain-wave signals through brain computer interfaces (BCI). Currently, BCI relies mostly on electroencephalography (EEG) to monitor the electrical activity of the brain. Using AI and non-invasive sensors in wearable devices such as headbands and earbuds, it is now becoming possible to analyze brain signals and extract relevant patterns (Rashid et al., 2020). Analysis of brain signals can be used to detect engagement levels and whether someone is focused or distracted, and to warn of the onset of fatigue. Neurable [www.neurable.com], a Boston-based startup, has developed BCI technology that measures brain activity to generate simple, real-time insights.

The Personalized Learning Orchestrator described later should incorporate all the above aspects to maximize the learning potential of the individual learner.

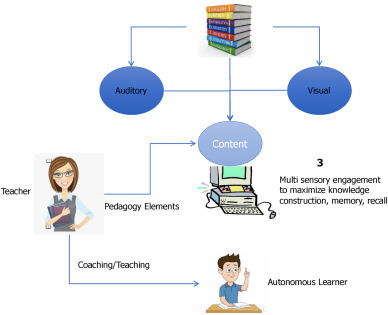

3.3.2. Multi-sensory engagement

Multi-sensory engagement implies that content should ideally be available in multiple modes: auditory, visual and text (Fig. 10). This would be extremely cumbersome to achieve without automation. AI algorithms can help create the same content in different sensory modes. The auditory mode can take the form of reading text aloud [Schramma, 2016], and the visual mode can take the form of learning graphs, simulations and videos, as described in Mayer [2010]. The successful large-scale deployment reported by Srinivasan and Murthy (2021) indicates that multisensory technology can be beneficial even with poor classroom infrastructure and little time spent using the software.

Fig. 10. Multi-sensory engagement.

Based on 20 years of experiments with his colleagues, Mayer [2010] presents evidence in the context of medical education that people who learned from a lesson with multi-sensory engagement consistently performed better on retention and transfer tests than those who learned from an otherwise identical lesson without multi-sensory engagement.

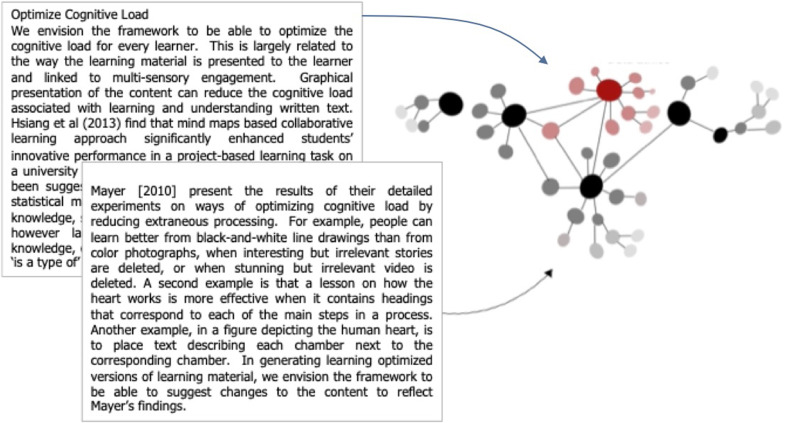

3.3.3. Optimize cognitive load

We envision a framework that can optimize the cognitive load for every learner. This is largely related to how the learning material is presented to the learner and is linked to multi-sensory engagement. Graphical presentation of content can reduce the cognitive load associated with learning and understanding written text. Chih-Hsiang et al. (2013) find that a mind-map-based collaborative learning approach significantly enhanced students’ innovative performance in a project-based learning task in a university management course. Knowledge graphs have been suggested as a way of representing knowledge for statistical machine learning; for a review of this body of work, see Nickel et al. (2016). Knowledge graphs are, however, largely limited to definitional extensions of knowledge, encapsulating relationships such as ‘also known as’ and ‘is a type of’.

Mayer [2010] presents the results of detailed experiments on ways of optimizing cognitive load by reducing extraneous processing. For example, people can learn better from black-and-white line drawings than from color photographs, and they learn better when interesting but irrelevant stories or stunning but irrelevant video are removed. A second example is that a lesson on how the heart works is more effective when it contains headings that correspond to each of the main steps in the process. A third example, in a figure depicting the human heart, is to place the text describing each chamber next to the corresponding chamber. In generating learning-optimized versions of learning material, we envision the framework suggesting content changes that reflect Mayer’s findings.

GyanAI [www.gyanAI.com] has created ‘Learning Graphs’ (Fig. 11), a graphical representation of content designed for learning. We illustrated a learning graph earlier in the context of the Comprehension Assistant. A Learning Graph captures the essence of a document graphically, as a network of concepts and concept relationships. It is presented in layers to promote easy understanding: the central theme of the document is displayed first, along with the other major concepts in the document, and the user can then expand the graph along the path they want to learn or explore. For example, Learning Graphs allow the learner to traverse a book in a non-linear fashion if desired. The idea is that some learners are comfortable reading long written text while others prefer to visualize the ideas in the text. Learning Graphs are a variation of Concept or Knowledge Graphs, with a focus on helping learners understand and comprehend material one chunk at a time, driven by their own interest.

Fig. 11. Gyan learning graphs.

Learning graphs can also be aggregated across multiple documents to create a synthesized learning graph. Fig. 11 illustrates a synthesized learning graph across three documents. The combined learning graph can enable a reader to understand the contents of multiple documents without having to read through redundant concepts in each document.
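Aggregation across documents can be sketched as a graph union in which identical concept relationships are merged and counted rather than repeated. The sketch below assumes that each document’s learning graph is already available as a list of (concept, relation, concept) triples with normalized concept names; it also ranks concepts by document support as one simple, hypothetical way to pick what appears in the first layer. How Gyan actually builds and synthesizes its graphs is not described here, so this only illustrates the idea.

```python
from collections import Counter

def synthesize(graphs):
    """Merge per-document triple lists into one graph.
    Returns {triple: number of documents that contain it}."""
    support = Counter()
    for triples in graphs:
        support.update(set(triples))  # count each triple once per document
    return dict(support)

def central_concepts(merged, top_n=3):
    """Rank concepts by the document support of the relationships they
    take part in; a stand-in for choosing the top layer of the graph."""
    weight = Counter()
    for (src, _rel, dst), count in merged.items():
        weight[src] += count
        weight[dst] += count
    return [concept for concept, _ in weight.most_common(top_n)]

# Hypothetical learning graphs extracted from three documents.
doc1 = [("photosynthesis", "produces", "oxygen"), ("photosynthesis", "requires", "sunlight")]
doc2 = [("photosynthesis", "produces", "oxygen"), ("chlorophyll", "absorbs", "sunlight")]
doc3 = [("photosynthesis", "requires", "sunlight"), ("photosynthesis", "produces", "oxygen")]

merged = synthesize([doc1, doc2, doc3])
for triple, n_docs in sorted(merged.items(), key=lambda kv: -kv[1]):
    print(n_docs, triple)
print(central_concepts(merged, top_n=1))
```

Six extracted relationships collapse into three, which is the redundancy reduction the synthesized graph is meant to provide.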

3.4. Automated open text assessment/assisted writing

Automated grading of essays/open text responses and assisted writing are two sides of the same coin. Effective writing is not only about communicating effectively with an audience but also about cementing memory formation and knowledge in the writer’s brain. Statistical language models such as BERT and GPT3 offer text prediction and generation capabilities, but without full contextual understanding. We see them deployed in many email and short-text writing environments, where they guess the next word or a reply. But, as Marcus (2020) points out, they can also generate nonsensical text, since they do not really understand the full context and lack the ability to do what we might call ‘common sense inferencing’. Besides these language models, there are many grammar checkers, which attempt to identify grammatical errors in one’s writing and, in many cases, suggest corrections. A more complete, fully contextual writing assistant would be able to guide the writer with the help of ‘Learning Graphs’ for any concept, reflecting global knowledge about that concept and commonly accepted rhetorical rules for effective writing. We envision such a Writing Assistant as part of the framework.

The same component can be used for open text assessment. Formative and ongoing assessments are an integral part of evaluating the efficacy of educational technology, pedagogy and content. Today, many courses and curricula rely mostly on multiple choice questions and minimize open text questions. However, complete understanding of a concept or learning unit can only be assessed through open text responses. It is widely recognized that the inability to assess open text responses at scale is holding back the growth of online learning [e.g., Haldi, 2020]. It is also well understood that human grading of essays is fraught with inconsistencies and other human limitations, such as recency bias: different graders of the same essay are quite likely to grade it differently. Compounding these limitations, online learning has adopted ‘peer grading’, in which learners grade one another’s work.

The open text assessment component should be easily configurable at the course or learning unit level. It should also be able to analyze a response in the context of the complete learning content the learner was exposed to. It should be completely explainable and should not need large amounts of training data.
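A deliberately simple sketch can show what ‘configurable’ and ‘explainable’ mean in practice: the rubric is plain data that an instructor edits per course, and every point awarded is traced to a criterion in a report. Here a criterion is satisfied when the response mentions any of a few instructor-supplied phrases; that keyword test is a toy assumption, far weaker than the contextual language understanding the framework calls for, and it is not how the Essay Grader described next works. The rubric is hypothetical, with a maximum of 15 points chosen to mirror Fig. 12.

```python
import re
from dataclasses import dataclass

@dataclass
class Criterion:
    name: str
    points: int
    evidence: list  # phrases that count as evidence (toy assumption)

def grade(response, rubric):
    """Score a response against a rubric. Returns (score, report), where the
    report explains, criterion by criterion, why points were or were not awarded."""
    text = response.lower()
    score, report = 0, []
    for c in rubric:
        hits = [p for p in c.evidence
                if re.search(r"\b" + re.escape(p.lower()) + r"\b", text)]
        earned = c.points if hits else 0
        score += earned
        reason = f"found {hits}" if hits else "no supporting evidence found"
        report.append(f"{c.name}: {earned}/{c.points} ({reason})")
    return score, report

# Hypothetical course-level rubric for an engineering question (max 15).
RUBRIC = [
    Criterion("Defines load path", 5, ["load path"]),
    Criterion("Explains failure mode", 5, ["buckling", "shear failure"]),
    Criterion("Proposes mitigation", 5, ["bracing", "stiffener"]),
]

score, report = grade("The load path runs through the columns, so buckling is the main risk.", RUBRIC)
print(score)  # 10
print("\n".join(report))
```

Because the configuration and the report are both plain, human-readable data, an instructor can audit and adjust every scoring decision.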

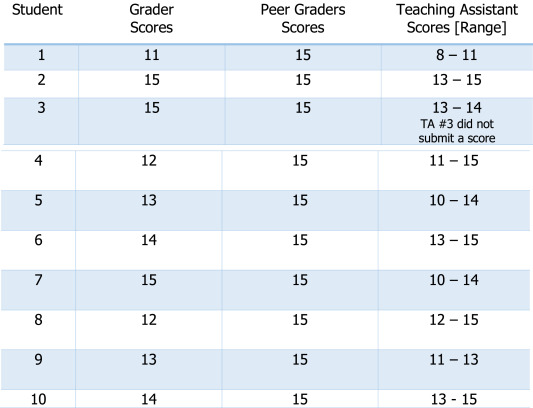

We report results from an explainable, course-configurable Essay Grader at a prominent, globally respected higher education institution that currently uses peer grading to assess open text responses in its online courses. The Essay Grader was tested on a sample question from an engineering course. The Grader was configured to reflect the professor’s course-level grading rubric and took into account all the reading materials relevant to the assignment. Fig. 12 compares the Grader’s automated scores with peer grades and with the grades of three teaching assistants (TAs) who graded the same essays manually.

Fig. 12. The explainable essay grader example (Max score = 15).

As Fig. 12 shows, all 10 students received perfect peer-graded scores. The TA scores for the same essay were widely dispersed, whereas the automated grader’s scores had a tighter dispersion across the 10 students. For each automated assessment, a detailed reasoning report was provided to the professor/institution explaining how the Grader arrived at the final score or grade. In all cases, the professor and TAs agreed with the Grader’s assessment. This grader can also be used as a Writing Assistant.

3.5. Personalized learning orchestrator

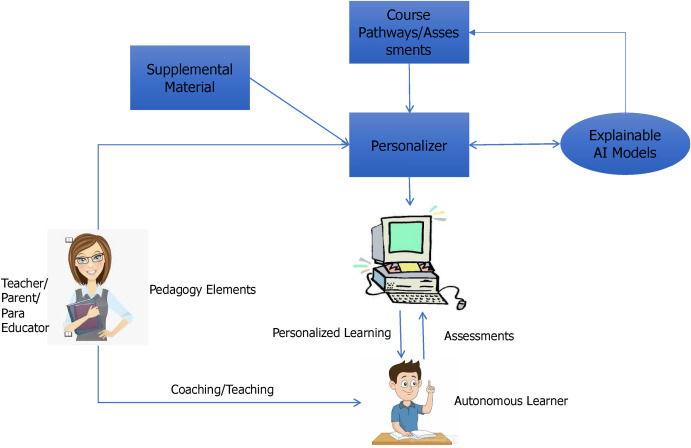

As reviewed earlier, there has been substantial AIED research on the benefits of personalization. An adaptive personalization component is integral to any AIED framework. It should identify each learner’s learning preferences over time and attempt to personalize their learning experience and optimize their learning potential [Fig. 13]. The Personalized Learning Orchestrator includes a personalizer and an orchestrator: the former continually personalizes the learning content, and the latter orchestrates the learning with the learner. The component will also integrate multisensory engagement and a cognitive load optimizer. This can be done by creating an initial set of granular learning pathways for the course or learning unit, identifying the combination of learning science mechanisms that works best for a learner, and engaging the learner through multiple senses, including contextually rich interactive conversation. The Personalizer should also be capable of personalization at a very granular level within a learning unit, such as an individual concept.

Fig. 13. Personalizer.
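One way a personalizer could learn which learning science mechanism works best for a given learner is to treat the choice as a multi-armed bandit problem. The sketch below uses epsilon-greedy selection, a standard bandit strategy that we substitute here for illustration; the mechanism names, the reward definition (1 if the learner answers a later retrieval question correctly, else 0), the exploration rate and the simulated learner are all assumptions.

```python
import random

class MechanismPersonalizer:
    """Epsilon-greedy choice among learning science mechanisms for one learner."""

    def __init__(self, mechanisms, epsilon=0.1, seed=None):
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.trials = {m: 0 for m in mechanisms}
        self.value = {m: 0.0 for m in mechanisms}  # running mean reward

    def choose(self):
        untried = [m for m, n in self.trials.items() if n == 0]
        if untried:
            return untried[0]  # try every mechanism once before comparing
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.trials))  # explore
        return max(self.value, key=self.value.get)  # exploit the best so far

    def record(self, mechanism, reward):
        """Incrementally update the mean reward observed for `mechanism`."""
        self.trials[mechanism] += 1
        n = self.trials[mechanism]
        self.value[mechanism] += (reward - self.value[mechanism]) / n

# Hypothetical learner whose true success rates differ by mechanism.
true_rate = {"spacing": 0.5, "interleaving": 0.7, "worked examples": 0.6}
simulated_learner = random.Random(1)
p = MechanismPersonalizer(true_rate, epsilon=0.1, seed=0)
for _ in range(500):
    m = p.choose()
    p.record(m, 1 if simulated_learner.random() < true_rate[m] else 0)
print(p.trials)  # most trials should go to the mechanism that works best
```

The learned values are directly inspectable, which keeps this kind of personalization consistent with the framework’s explainability requirement.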

We envision the creation, over time, of a meta-taxonomy of learning types in which each atomic learning item is categorized into a more abstract learning type. This might allow us to understand learning preferences at the level of abstract learning types.

At a more macro level, the Personalizer can also use machine learning to see whether there are systemic reasons for success in specific atomic learning items or abstract learning types across a learning cohort such as a class, a school or a collection of schools. The Personalizer will track where a learner is having difficulty and provide an appropriate intervention. The intervention will require the framework to assess whether the difficulty is due to a lack of prerequisite knowledge, difficulty understanding the material, or both. In the former case, the framework should allow the learner to access prerequisite materials and become more proficient where possible. In the latter, the framework should present additional examples and practice problems to give the learner an opportunity for repetition. It can also incorporate these findings into the interleaving and spacing strategy for the remainder of the course. The course pathway thus becomes personalized for the learner, with timely remedial interventions.
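The diagnostic step described above, deciding whether a difficulty stems from missing prerequisites or from the material itself, can be sketched with a prerequisite map and per-concept mastery estimates. The concept names, the mastery scores in [0, 1] and the 0.7 mastery threshold are hypothetical; in practice, mastery would be estimated from the learner’s assessment history.

```python
# Hypothetical prerequisite map: concept -> concepts it builds on.
PREREQUISITES = {
    "percentages": ["fractions", "decimals"],
    "decimals": ["place value"],
    "fractions": ["division"],
}

def intervention(concept, mastery, threshold=0.7):
    """Suggest a remedial step for a concept the learner is struggling with.
    Walks the prerequisite map depth-first and collects weak prerequisites,
    deepest first, so foundations are revisited before what builds on them.
    Concepts with no mastery estimate are treated as not yet mastered."""
    weak, visited = [], set()

    def visit(c):
        for pre in PREREQUISITES.get(c, []):
            if pre in visited:
                continue
            visited.add(pre)
            visit(pre)
            if mastery.get(pre, 0.0) < threshold:
                weak.append(pre)

    visit(concept)
    if weak:
        return ("review prerequisites", weak)
    return ("extra examples and practice", [concept])

mastery = {"fractions": 0.9, "division": 0.95, "decimals": 0.5, "place value": 0.4}
print(intervention("percentages", mastery))
# ('review prerequisites', ['place value', 'decimals'])
```

When every prerequisite is mastered, the function falls through to additional examples and practice, matching the second branch of the intervention logic.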

3.6. Parents/para educators

Parent involvement has been a cornerstone of the educational initiatives of several recent U.S. Presidents, including former President Obama’s “Race to the Top” initiative, former President George W. Bush’s No Child Left Behind Act, and former President Clinton’s Goals 2000: Educate America Act and Improving America’s Schools Act of 1994 (a reauthorization of the Elementary and Secondary Education Act), and it is actively promoted in national programs and initiatives (e.g., Head Start). However, previous research shows inconsistent relationships between parent involvement and academic achievement.

While the impact of parental involvement on children’s education is debatable, the pandemic has forced parents to become more involved. This has created a need to train parents to teach and monitor learning at home effectively. The AI-enabled framework presented in this section can ease the parental burden and enable effective learning even in a completely remote learning environment. The framework envisions a personalized learning orchestrator that can work interactively with the learner, so parents do not have to take on the role of a teacher unless they want to. The framework also envisions peers and learning communities as part of the learning network, which can further ease the burden on parents.

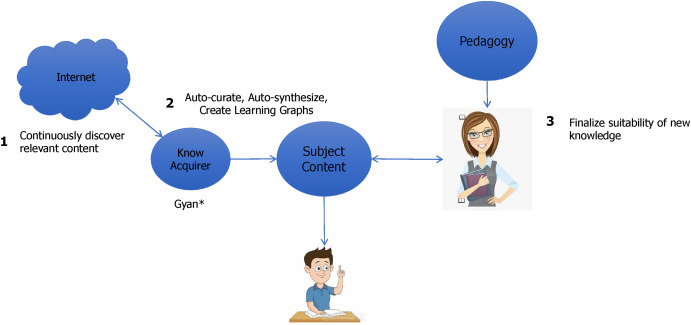

3.7. Continuous knowledge acquirer

As pointed out earlier, knowledge on every conceivable topic is continuously growing on the internet. While foundational concepts and facts may not change in most cases, it will be important to have continuous access to new knowledge. This serves three purposes. First, additional material may enhance a learner’s understanding of a topic or concept as they go through a learning program. Second, it enables the learner to keep learning continuously. Third, it gives teachers an easy way to stay up to date with new developments in their field.

The framework envisions a continuous knowledge acquirer that would discover new relevant knowledge dynamically and present it to the learner or teacher (Fig. 14). It will access relevant content on any topic from the internet [or other sources] and auto-curate and auto-synthesize it continuously on its own. The knowledge discovery feature can be applied to any concept or topic of interest. Depending on the context, newly discovered content can be staged for the teacher or author to review before it is presented to the learner.

Fig. 14. Continuous knowledge acquisition.
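A single pass of such an acquirer can be sketched as filter, deduplicate and stage. The sketch below scores candidate articles against a topic description using cosine similarity of word counts, skips items whose text has already been examined (tracked by a SHA-256 content hash), and stages relevant new items, best first, for teacher review. The similarity measure, the 0.2 threshold and the data structures are assumptions for illustration; fetching content from the internet and synthesizing it are not shown.

```python
import hashlib
import math
import re
from collections import Counter

def vectorize(text):
    """Bag-of-words counts of lowercase alphabetic tokens."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a, b):
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def acquire(topic, candidates, seen_hashes, threshold=0.2):
    """One acquisition pass. `candidates` is a list of (title, text);
    `seen_hashes` is updated in place so later passes skip examined items.
    Returns (title, score) pairs to stage for review, highest score first."""
    topic_vec = vectorize(topic)
    staged = []
    for title, text in candidates:
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        if digest in seen_hashes:
            continue  # already examined in this or an earlier pass
        seen_hashes.add(digest)
        score = cosine(topic_vec, vectorize(text))
        if score >= threshold:
            staged.append((title, round(score, 3)))
    return sorted(staged, key=lambda item: item[1], reverse=True)

seen = set()
items = [("A", "Plants use photosynthesis to make sugar"),
         ("B", "Stock markets fell today"),
         ("A copy", "Plants use photosynthesis to make sugar")]
print(acquire("photosynthesis in plants", items, seen))
```

Running the pass again with the same `seen` set stages nothing, which is the behavior a continuously running acquirer needs.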

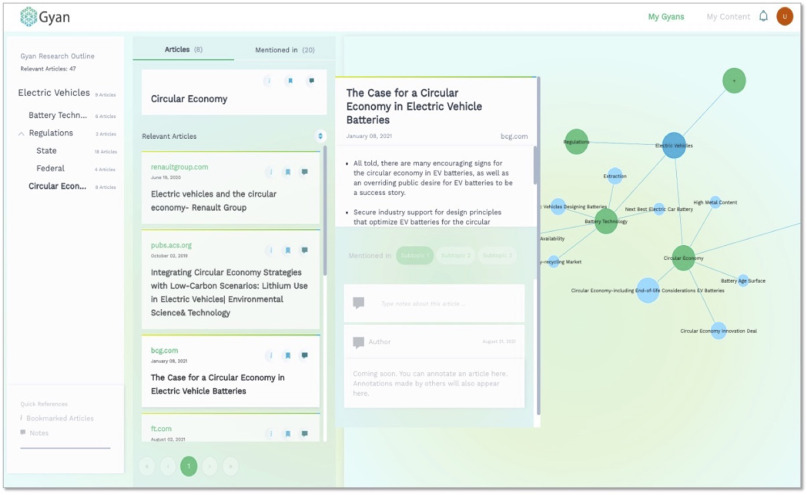

GyanAI [www.gyanai.com] has developed the first on-demand continuous knowledge acquisition engine, Gyan, which can provide auto-curated, auto-synthesized knowledge on any topic at any time. Fig. 15 shows a snapshot in which Gyan has automatically found the relevant articles for the user’s topic of interest, created an appropriate table of contents, categorized the relevant articles and created a synthesized learning graph (on the right).

Fig. 15. Continuous knowledge acquisition.

Gyan can continuously keep this knowledge updated for any or all concepts in the learning graph.

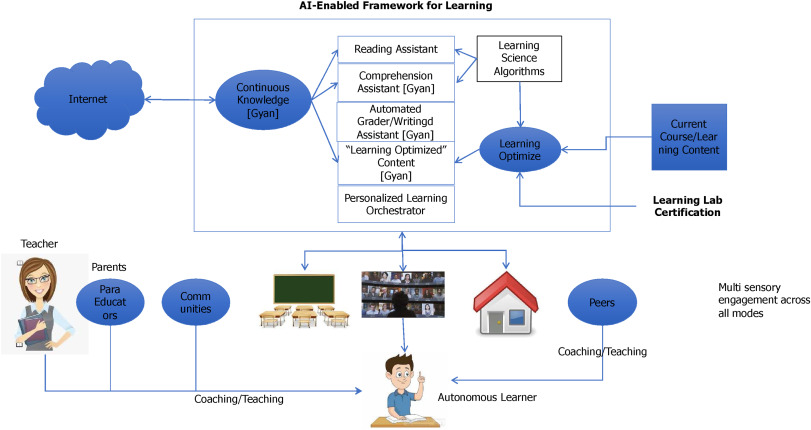

3.8. The complete framework

Fig. 16 combines all the components described in this section into a complete framework. We envision the following components:

Fig. 16. An AI-Enabled framework for learning.

Reading Assistant – enables the learner to read any content aloud, with the ability to control their reading.

Comprehension Assistant – facilitates understanding of specific concepts with learning graphs.

Automated Grader – assesses open text responses in a traceable manner, giving the assessor and learner complete visibility into the assessment reasoning. The grader can be configured to a course- or assessment-specific rubric.

Dynamic Learning Optimized Content – integrates new content/knowledge with existing course content/curriculum using learning science algorithms and the human teacher’s input.

Learning Science Algorithms/Learning Optimizer – converts content into a form optimized for learning, reflecting learning science.

Learning Lab Certification – educational institutions may need to create a common competency to ensure that all their offerings are properly and consistently optimized for learning.

Personalized Learning Orchestrator – orchestrates the learner’s learning according to the learning objectives of the course, leveraging the other components, and personalizes it to the learner using machine learning based on the learner’s preferences and performance as the course progresses.

Continuous Knowledge Acquirer – acquires relevant knowledge continuously on any set of topics from any collection of sources and auto-synthesizes it with existing content in a variety of forms.

There are other important actors including peers and learning communities whose role can also be facilitated by the framework appropriately.

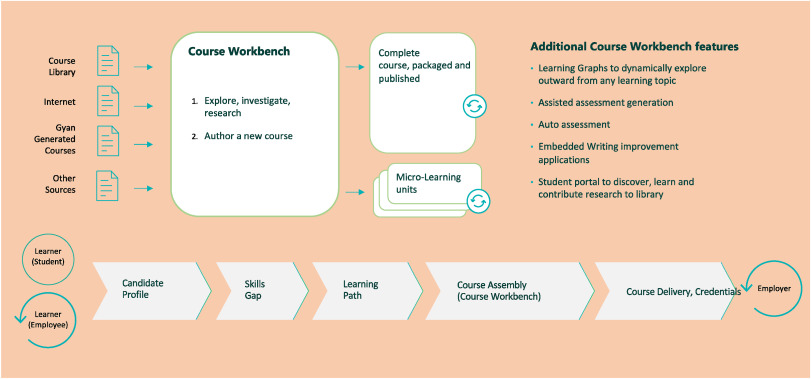

4. Educational institution centric view

The previous section presented our vision of an AI-enabled future for learning from a student-centric point of view. An equivalent framework can also be envisioned for an educational institution, or for a corporation interested in up-skilling or re-skilling its employees or in continuous talent optimization. Fig. 17 presents a dynamic learning framework from an educational institution/corporation perspective. There are two aspects to note here. The first is the need for a dynamic course authoring framework that can find relevant content in existing content repositories and on the public internet. We envision that, in the future, institutions will be substantially driven by an increasingly competency-based job market. Institutions will need to rapidly design learning content in response to such market demand, at an industry or even a company-specific level. The framework envisions the use of AI to design the required learning content rapidly.

Fig. 17. Institution centric view.

The second aspect is re-skilling, up-skilling or simply lifelong learning, where a learner needs to qualify for a specific job or role. Fig. 17 shows how the framework can support automated identification of the learner’s current skills from their resume and the normalization of those extracted skills against a skills taxonomy. It also envisions determining personalized learning pathways for the learner to achieve the required competencies, since not all learners start from the same place. The personalized learning pathways are determined by identifying the learner’s skills from their resume, matching them with the skills required by the learning content, and identifying a specific learning pathway that closes the gap.
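The matching step can be sketched in a few lines: extracted resume terms are normalized to canonical skills through a synonym table, the gap to the target role is computed as a set difference, and the missing skills are ordered so that prerequisites come first using the standard library’s `graphlib.TopologicalSorter` (Python 3.9+). The synonym table, role requirements and prerequisite links are hypothetical, and the resume is assumed to have already been reduced to a list of terms.

```python
from graphlib import TopologicalSorter

SYNONYMS = {  # hypothetical mini skills taxonomy: surface form -> canonical skill
    "py": "python", "python3": "python", "python": "python",
    "postgres": "sql", "sql": "sql",
    "stats": "statistics", "statistics": "statistics",
    "ml": "machine learning", "machine learning": "machine learning",
}
PREREQS = {  # canonical skill -> skills that should be learned first
    "machine learning": {"python", "statistics"},
    "data visualization": {"python"},
}

def normalize(terms):
    """Map resume terms to canonical skills, ignoring unknown terms."""
    return {SYNONYMS[t.lower()] for t in terms if t.lower() in SYNONYMS}

def learning_pathway(resume_terms, required):
    """Order the missing skills so that each appears after its missing
    prerequisites; prerequisites the learner already has are dropped."""
    missing = set(required) - normalize(resume_terms)
    graph = {skill: PREREQS.get(skill, set()) & missing for skill in missing}
    return list(TopologicalSorter(graph).static_order())

role = {"python", "sql", "statistics", "machine learning", "data visualization"}
print(learning_pathway(["Py", "Postgres"], role))
# statistics comes before machine learning; python and sql are already covered
```

The same structure serves the corporate case: the required skill set would come from a role profile instead of a course.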

The same framework is applicable for the corporation aiming to optimize its talent pool or an individual wanting to re-skill or up-skill.

5. Summary

In an important contribution, Hwang (2020) outlines a vision and challenges for AIED in the context of setting a preferred research agenda. The study proposes categorization of AIED studies into four roles: intelligent tutor, intelligent tutee, intelligent learning tool or partner and policy-making adviser. It will be useful to compare the framework proposed in this paper with Hwang (2020).

First, the primary purpose of our preferred future framework is not to set a research agenda, even though it could be used for that purpose. Our framework is actionable and meant to guide AIED application efforts; it can be implemented in part or in whole by one or more application builders. Second, the framework in this paper maps directly to three of the four roles described by Hwang (2020): intelligent tutor, intelligent tutee and intelligent learning tool. Further, it can easily be extended to encompass the policy-making adviser role, but we leave that for future work. The preferred future presented in this paper reflects all ten research areas identified by Hwang (2020). Areas or dimensions of our framework that are not explicitly reflected in Hwang (2020) include the use of AI for dynamic course authoring, continuous knowledge acquisition, optimizing learning content to reflect learning science, optimizing cognitive load, and the intelligent creation and management of learning communities. We see the framework proposed in this paper as a superset of the vision outlined in Hwang (2020), albeit with a different primary objective.

The preferred future for AIED proposed in this paper addresses the challenges with AI identified earlier. First, it is based on an inclusive definition of machine intelligence: a combination of expert knowledge and knowledge acquired from data. This definition addresses issues relating to bias and the need for large amounts of data. If an AI modeling effort starts from expert knowledge, which accumulates many years of experience, it does not need to discover all intelligence from data and therefore requires less data. Because the model no longer depends solely on patterns in data that may themselves be skewed, this approach can also substantially reduce bias in the AI model.
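One standard way to make "expert knowledge plus knowledge acquired from data" concrete is Bayesian updating: an expert's belief becomes a prior, and observed data refine it. The sketch below assumes a Beta prior on the probability that a learner answers items for one skill correctly; the `BetaBelief` class, the prior values standing in for expert judgment, and the response counts are all hypothetical, and the conjugate Beta-Binomial model is our illustrative choice rather than the paper's method.

```python
from dataclasses import dataclass


@dataclass
class BetaBelief:
    """Beta(alpha, beta) belief about a learner's probability of answering correctly."""
    alpha: float
    beta: float

    @classmethod
    def from_expert(cls, mean, strength):
        """Encode an expert estimate `mean` as a prior worth `strength` pseudo-observations."""
        return cls(alpha=mean * strength, beta=(1 - mean) * strength)

    def update(self, correct, total):
        """Conjugate update with `correct` successes out of `total` observed responses."""
        return BetaBelief(self.alpha + correct, self.beta + (total - correct))

    @property
    def mean(self):
        return self.alpha / (self.alpha + self.beta)

    @property
    def variance(self):
        n = self.alpha + self.beta
        return self.alpha * self.beta / (n * n * (n + 1))
```

With an expert prior of `BetaBelief.from_expert(0.7, 10)` and 3 correct answers out of 4, the posterior is Beta(10, 4) with mean 10/14; starting instead from a flat Beta(1, 1) prior, the same four responses give Beta(4, 2) with mean 4/6 and roughly twice the posterior variance. The `strength` parameter controls how much the expert prior weighs against the data; as observations accumulate, the data dominate.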

Second, we specify that all AI models used must be explainable. This addresses the problem of black-box recommendations and findings, which are likely to face significant barriers to user adoption. There may be areas where explainable models are not needed; in these cases, modelers are free to choose any approach they find effective. We stress, however, that requiring explainability does not imply compromising model performance. In our experience, we have always been able to build explainable models that performed as well as or better than black-box approaches.
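As a minimal illustration of what "explainable" can mean in practice, an additive model lets every prediction be decomposed into per-feature contributions that a teacher can inspect. The feature names, weights, and bias below are hypothetical, and a logistic (additive) model is only one of several explainable model families; this sketch does not represent the specific models the authors built.

```python
import math

# Hypothetical weights of a logistic model predicting mastery of a quiz topic.
WEIGHTS = {"practice_items_done": 0.08, "avg_hint_requests": -0.6, "days_since_review": -0.15}
BIAS = -0.5


def predict_with_explanation(features):
    """Return P(mastery) plus each feature's additive contribution to the log-odds."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    logit = BIAS + sum(contributions.values())
    probability = 1 / (1 + math.exp(-logit))
    # Rank contributions by magnitude so the most influential factors are listed first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return probability, ranked
```

For example, `predict_with_explanation({"practice_items_done": 25, "avg_hint_requests": 1.5, "days_since_review": 4})` yields contributions of about +2.0, -0.9 and -0.6, which together with the bias give a log-odds of about 0, i.e. a probability of about 0.5. The explanation is exact rather than approximate because the prediction is literally the sum of the listed terms.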

In the case of natural language processing, e.g., open text assessment, our framework envisions an explainable natural language understanding platform that can retain full context and genuinely attempt to understand the discourse. We briefly reported results from implementing an explainable open text grader that can be configured at the course level and can also take relevant learning content into account when assessing responses.
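The grader's internals are not described in this section. Purely to illustrate the two properties named above, course-level configuration and an explanation attached to every score, the sketch below scores a free-text answer by weighted coverage of rubric concepts, each with accepted phrasings, and reports which concepts were covered or missed. This keyword-coverage approach is far simpler than the discourse-level understanding the framework envisions; the `CourseRubric` class, the `grade` function, and the rubric contents are hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class CourseRubric:
    """Hypothetical course-level configuration: concept -> (weight, accepted phrasings)."""
    concepts: dict


def grade(answer, rubric):
    """Score an answer by weighted concept coverage and explain the score."""
    # Normalize to lowercase alphanumeric tokens separated by single spaces;
    # phrasings are assumed to be written in the same lowercase, space-separated form.
    text = " ".join(re.findall(r"[a-z0-9]+", answer.lower()))
    found, missing = [], []
    for concept, (weight, phrasings) in rubric.concepts.items():
        # A concept counts as covered if any accepted phrasing appears as whole words.
        if any(re.search(rf"\b{re.escape(p)}\b", text) for p in phrasings):
            found.append(concept)
        else:
            missing.append(concept)
    total = sum(w for w, _ in rubric.concepts.values())
    score = sum(rubric.concepts[c][0] for c in found) / total
    return score, {"covered": found, "missing": missing}
```

With a rubric of `{"photosynthesis": (2, ["photosynthesis"]), "light energy": (1, ["light", "sunlight"]), "glucose": (1, ["glucose", "sugar"])}`, the answer "Plants use sunlight to make sugar." scores 0.5 and the explanation lists "photosynthesis" as missing, which tells both learner and instructor exactly why points were lost.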

Third, the framework should be implemented in phases matched to the sophistication of the target environment. Srinivasan and Murthy (2021) describe a successful phased implementation in which they first introduced a multi-sensory intervention and, after its large-scale adoption, began rolling out a more sophisticated version of the intervention for personalized learning.

Fourth, as suggested in the literature, the intervention must be adapted to fit the local environment: language, culture and practices in the local environment must be considered in the implementation plan. Finally, the framework can provide as much privacy as desired; sensitive data such as learner performance and learning style can be protected where needed.
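A configurable level of privacy can be sketched as a per-deployment policy stating, for each learner field, whether it is shared as-is, pseudonymized, or withheld. The policy, the field names, the `apply_policy` function, and the secret key below are hypothetical; keyed hashing with HMAC-SHA256 is one common pseudonymization technique, not a method the paper specifies.

```python
import hashlib
import hmac

# Hypothetical deployment policy: how each learner field may leave the local system.
POLICY = {
    "learner_id": "pseudonymize",
    "grade_level": "share",
    "quiz_scores": "withhold",
    "learning_style": "withhold",
}


def apply_policy(record, policy, key):
    """Return a copy of `record` with only what the policy allows; unknown fields are withheld."""
    out = {}
    for field, value in record.items():
        action = policy.get(field, "withhold")
        if action == "share":
            out[field] = value
        elif action == "pseudonymize":
            # Keyed hash: the same ID always maps to the same pseudonym (so records can be
            # linked), but the pseudonym cannot be recomputed from a raw ID without the key.
            out[field] = hmac.new(key, str(value).encode(), hashlib.sha256).hexdigest()
    return out
```

Defaulting unknown fields to "withhold" means a newly added sensitive field is protected until someone explicitly decides to share it; tightening or relaxing privacy then amounts to editing the policy rather than the code.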

We have also illustrated implementations of several framework components, individually and in combination: the reading assistant, comprehension assistant, multisensory engagement, cognitive load optimization, continuous knowledge discovery and dynamic content creation.

6. Summary

AIED holds enormous promise for addressing chronic challenges of equitable learning at scale. In this paper, we have proposed a preferred future for AI in Education with the twin objectives of scaling quality education affordably and optimizing the learning potential of individual learners. Various components of the framework can scale different parts of the teaching/learning model while allowing the human teacher to continue evolving as a facilitator and coach in addition to being a subject matter expert. The framework strongly advocates the use of explainable AI, the integration of scientific findings on knowledge formation and learning, and the appropriate involvement of humans to overcome previously observed challenges. The framework applies universally to all learners, including lifelong learners, and was also shown to extend to the perspective of an educational institution or a corporation.

Declaration of competing interest

None.

References

Angelo and Cross, 1993 T.A. Angelo, K.P. Cross

Classroom assessment techniques: A handbook for college teachers

Jossey-Bass, San Francisco (1993)

ASER, 2019 Annual Status of Education Report, India

http://www.asercentre.org//p/359.html (2019)

Baker and Smith, 2019 T. Baker, L. Smith

Educ-AI-tion rebooted? Exploring the future of artificial intelligence in schools and colleges

Nesta Foundation (2019)

https://media.nesta.org.uk/documents/Future_of_AI_and_education_v5_WEB.pdf

Bender and Koller, 2020 E.M. Bender, A. Koller

Climbing towards NLU: On meaning, form, and understanding in the age of data

Proceedings of the 58th annual meeting of the association for computational linguistics (2020), pp. 5185-5198

July 5 – 10, 2020

Benoit et al., 2014 R.G. Benoit, K.K. Szpunar, D.L. Schacter

Ventromedial prefrontal cortex supports affective future simulation by integrating distributed knowledge

Proceedings of the National Academy of Sciences of the United States of America, 111 (2014), pp. 16550-16555

Beteille and Evans, 2019 T. Beteille, D.K. Evans

Successful teachers, successful students: Recruiting and supporting society’s most crucial profession. World Bank policy approach to teachers

http://documents1.worldbank.org/curated/en/235831548858735497/Successful-Teachers-Successful-Students-Recruiting-and-Supporting-Society-s-Most-Crucial-Profession.pdf

Bonwell and Eison, 1991 C.C. Bonwell, J.A. Eison

Active learning: Creating excitement in the classroom. ASHE-ERIC higher education report No. 1

The George Washington University, School of Education and Human Development, Washington, D.C. (1991)

Brookover, 1979 W.C. Brookover, et al.

School social systems and student achievement: Schools can make a difference

Praeger, NY (1979)

Burcu and Sungur, 2009 S. Burcu, S. Sungur

Parental influences on students’ self-concept, task value beliefs, and achievement in science

Spanish Journal of Psychology, 12 (2009), pp. 106-117

Castro Sotos et al., 2007 A.E. Castro Sotos, et al.

Students’ misconceptions of statistical inference: A review of the empirical evidence from research on statistics education

Educational Research Review, 2 (2007), pp. 98-113

Central Square Foundation, 2020 Central Square Foundation

State of the sector report on private schools in India

(2020)

Chatterjee and Bhattacharjee, 2020 S. Chatterjee, K.K. Bhattacharjee

Adoption of artificial intelligence in higher education: A quantitative analysis using structural equation modelling

Education and Information Technologies (2020), in press

Chen et al., 2020 H. Chen, et al.

Application and theory gaps during the rise of artificial intelligence in education

Computers & Education: Artificial Intelligence, 1 (2020), Article 100002

Chen et al., 2021 H. Chen, et al.

Twenty years of personalized language learning: Topic modeling and knowledge mapping

Educational Technology & Society, 24 (1) (2021), pp. 205-222

Chih-Hsiang et al., 2013 W. Chih-Hsiang, et al.

A mindtool-based collaborative learning approach to enhancing students’ innovative performance in management courses

Australasian Journal of Educational Technology, 29 (1) (2013)

Clark et al., 1991 J. Clark, et al.

Dual coding theory and education

Educational Psychology Review, 3 (1991), pp. 149-210

CNBC, 2020 CNBC

https://www.cnbc.com/2020/02/12/bill-and-melinda-gates-say-education-philanthropy-is-not-having-impact.html (2020)

Coleman, 1991 J. Coleman

Parent involvement in education. Policy perspective. Office of educational research and improvement

U.S. Department of Education, Washington, D.C. (1991)

D’Amour, 2020 A. D’Amour, et al.

Underspecification presents challenges for credibility in modern machine learning

https://arxiv.org/abs/2011.03395 (2020)

Domina, 2005 T. Domina

Leveling the home advantage: Assessing the effectiveness of parent involvement in elementary school

Sociology of Education, 78 (2005), pp. 233-249

Dudai et al., 2015 Y. Dudai, A. Karni, J. Born

The consolidation and transformation of memory

Neuron, 88 (2015), pp. 20-32

El Nokali et al., 2010 N. El Nokali, N. Bachman, E. Votruba-Drzal

Parent involvement and children’s academic and social development in elementary school

Child Development, 81 (3) (2010), pp. 988-1005

Epstein, 1991 J. Epstein

Effects on student achievement of teachers’ practices of parent involvement

Advances in Reading, 5 (1991), pp. 261-276

Forrin and MacLeod, 2017 N.D. Forrin, C.M. MacLeod

This time it’s personal: The memory benefit of hearing oneself

Memory (2017), 10.1080/09658211.2017.1383434

October 2, 2017

Gao and Leung, 2019 J. Gao, Leung, et al.

The neurophysiological correlates of religious chanting

Scientific Reports, 9 (2019), p. 4262, 10.1038/s41598-019-40200-w

Ghazanfar and Schroeder, 2006 A.A. Ghazanfar, C.E. Schroeder

Is neocortex essentially multisensory?

Trends in Cognitive Sciences, 10 (6) (2006), pp. 278-285

Haldi, 2020 T.C. Haldi

Online learning’s grading challenge

Blog post (2020)

https://intelligentmachineslab.com/online-learnings-grading-challenge/

Accessed 18th Aug 2020

Hartzwell, 2018 J. Hartzwell

A neuroscientist explores the “Sanskrit effect”

Scientific American (2018)

Jan 2, 2018

Henderson, 1991 A. Henderson

The evidence continues to grow: Parent involvement improves student achievement

National Committee for Citizens in Education, Washington, D.C. (1991)

Hill et al., 2004 N. Hill, et al.

Parent academic involvement as related to school behavior, achievement, and aspirations: Demographic variation across adolescence

Child Development, 75 (5) (2004), pp. 1491-1509

Hwang, 2014 G. Hwang

Definition, framework and research issues of smart learning environments – a context-aware learning perspective

Smart Learning Environments, 1 (4) (2014)

Nov 2014

Hwang, 2020 G. Hwang

Vision, challenges, roles and research of artificial intelligence in education

Computers & Education: Artificial Intelligence, 1 (2020), p. 100001

Iiyoshi and Vijay Kumar, 2010 T. Iiyoshi, M.S. Vijay Kumar

T. Iiyoshi, M.S. Vijay Kumar (Eds.), Opening up education: The collective advancement of education through open technology, open content and open knowledge, MIT Press (2010)

Janda et al., 2019 H.K. Janda, A. Pawar, S. Du, V. Mago

Syntactic, semantic and sentiment analysis: The joint effect on automated essay evaluation

IEEE Access, 7 (2019), pp. 108486-108503

Johnson et al., 2009 B.G. Johnson, F. Phillips, L.G. Chase

An intelligent tutoring system for the accounting cycle: Enhancing textbook homework with artificial intelligence

Journal of Accounting Education, 27 (2009), pp. 30-39

Kamal et al., 2013 N.F. Kamal, N.H. Mahmood, N.A. Zakaria

Modeling brain activities during reading working memory task: Comparison between reciting Quran and reading book

Procedia – Social and Behavioral Sciences, 97 (2013), pp. 83-89

Kao, 2004 G. Kao

Parental influences on the educational outcomes of immigrant youth

International Migration Review, 38 (2004), pp. 427-450

Kolowich, 2014 S. Kolowich

Writing instructor, skeptical of automated grading, pits machine vs. machine

The Chronicle of Higher Education (April 28, 2014)

Lee and Bowen, 2006 J. Lee, N. Bowen

Parent involvement, cultural capital, and the achievement gap among elementary school children

American Educational Research Journal, 43 (2) (2006), pp. 193-218

Marcus, 2020 G. Marcus

The next decade in AI: Four steps towards robust artificial intelligence

https://arxiv.org/abs/2002.06177 (2020)

Mayer, 2010 R.E. Mayer

Applying the science of learning to medical education

Medical Education, 44 (2010), pp. 543-549

McNeal, 2014 R.E. McNeal Jr.

Parent involvement, academic achievement and the role of student attitudes and behaviors as mediators

Universal Journal of Educational Research, 2 (8) (2014), pp. 564-576, 10.13189/ujer.2014

http://www.hrpub.org

Muller, 1998 C. Muller

Gender differences in parental involvement and adolescents’ mathematics achievement

Sociology of Education, 71 (1998), pp. 336-356

Nickel et al., 2016 M. Nickel, et al.

A review of relational machine learning for knowledge graphs

Proceedings of the IEEE, 104 (1) (2016), pp. 11-33

Jan. 2016

Novak and Canas, 2008 J.D. Novak, A.J. Canas

The theory underlying concept maps and how to construct and use them

Technical Report IHMC (2008)

Paller and Wagner, 2002 K.A. Paller, A.D. Wagner

Observing the transformation of experience into memory

Trends in Cognitive Sciences, 6 (2002), pp. 93-102

Pass, 2004 S. Pass

Parallel paths to constructivism: Jean Piaget and Lev Vygotsky

Information Age Publishing (2004), ISBN 1593111452

Patel, 2006 N. Patel

Perceptions of student ability: Effects on parent involvement in middle school